Experiments in Machine Unlearning

On Algorithmic Curation and Museums Marginalia

Giulia Taurino

Introduction

We walk into museums with the expectation that objects will be exposed, exhibited, explained. Conceptual itineraries, chronological paths, geolocalized tours are designed throughout galleries and exhibition spaces to guide us across collections and make sense of their narratives. Yet, artworks on view often account for a small percentage of the overall archive, made of objects, curiosities, and undervalued art that remain temporarily hidden. While the work of curators is needed to filter what would otherwise be received as a meaningless hoard of objects, questions arise about the biases of art histories based on centuries of colonialist thinking. Bearing in mind this inherent limit, the curatorial work in museums has become a complex process of decolonization in search of new discoveries, invisible epistemologies and other unknowns. How can digital tools assist this process of reframing knowledge? This essay focuses on the importance and responsibility of the curatorial process, by presenting a series of creative computational experiments with digitized museum collections that involve different levels of machine intervention.

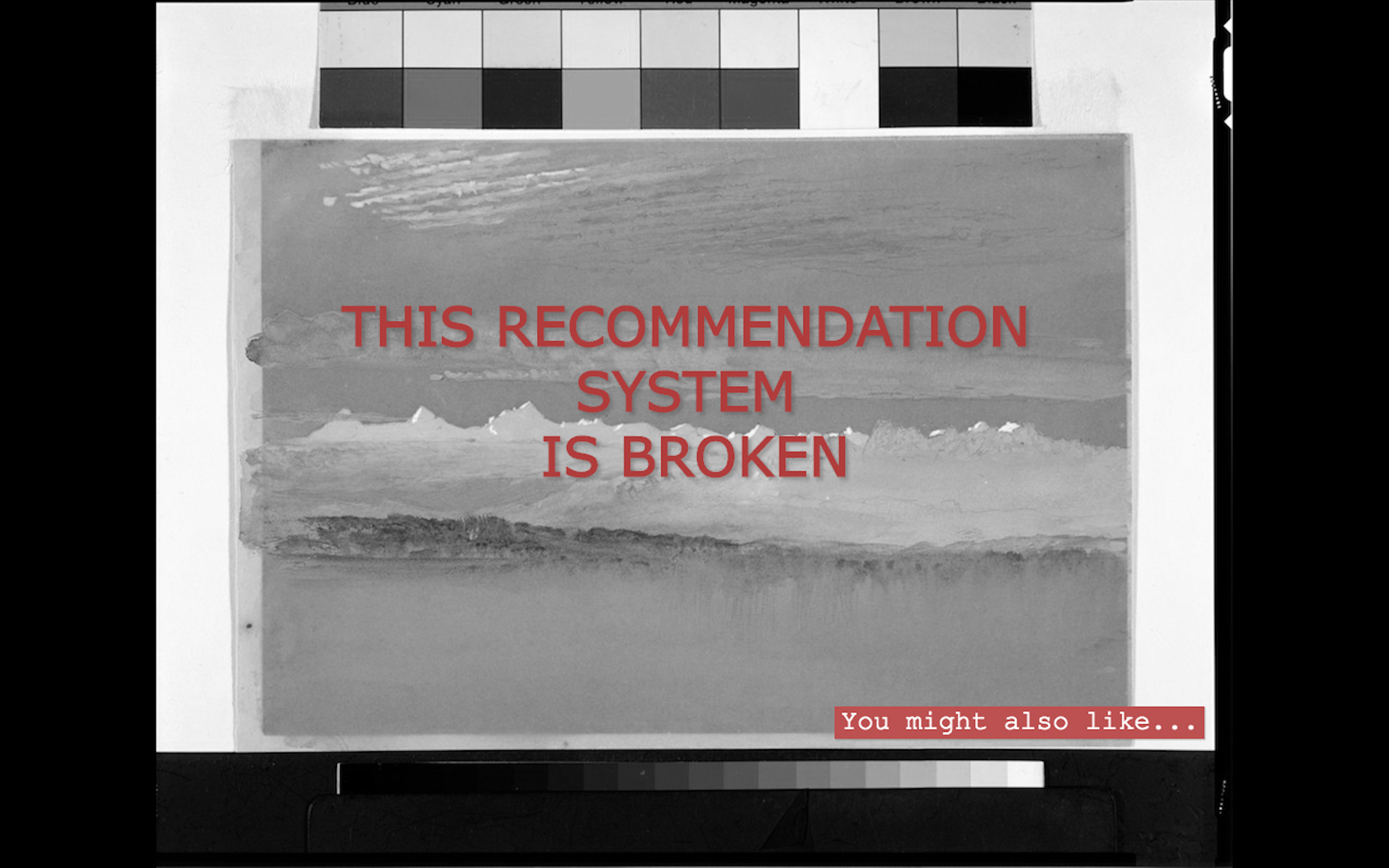

I first started reasoning on algorithmic curation as part of Curatorial A(i)gents, a series of art projects developed by members and affiliates of metaLAB (at) Harvard exploring the intersection between AI, museum data and curatorial practices at the Harvard Art Museums. My contribution to the series, This Recommendation System is Broken, originated from a reflection on how to turn forms of machine-brokenness, errors, dysfunctions into opportunities for rewriting the code and challenging our own cultural biases. Inspired by the unique vision of the metaLAB community, I began my own journey in research-driven art and art-driven research as a methodology built around questioning conventional perspectives, repurposing concepts, provoking alternative thought-processes. The opposite notions of vis·i·bil·i·ty / in·vis·i·bil·i·ty became focal points for a study of the ontological and phenomenological implications of different states of being seen / unseen as related to practices of looking in the museum’s space.

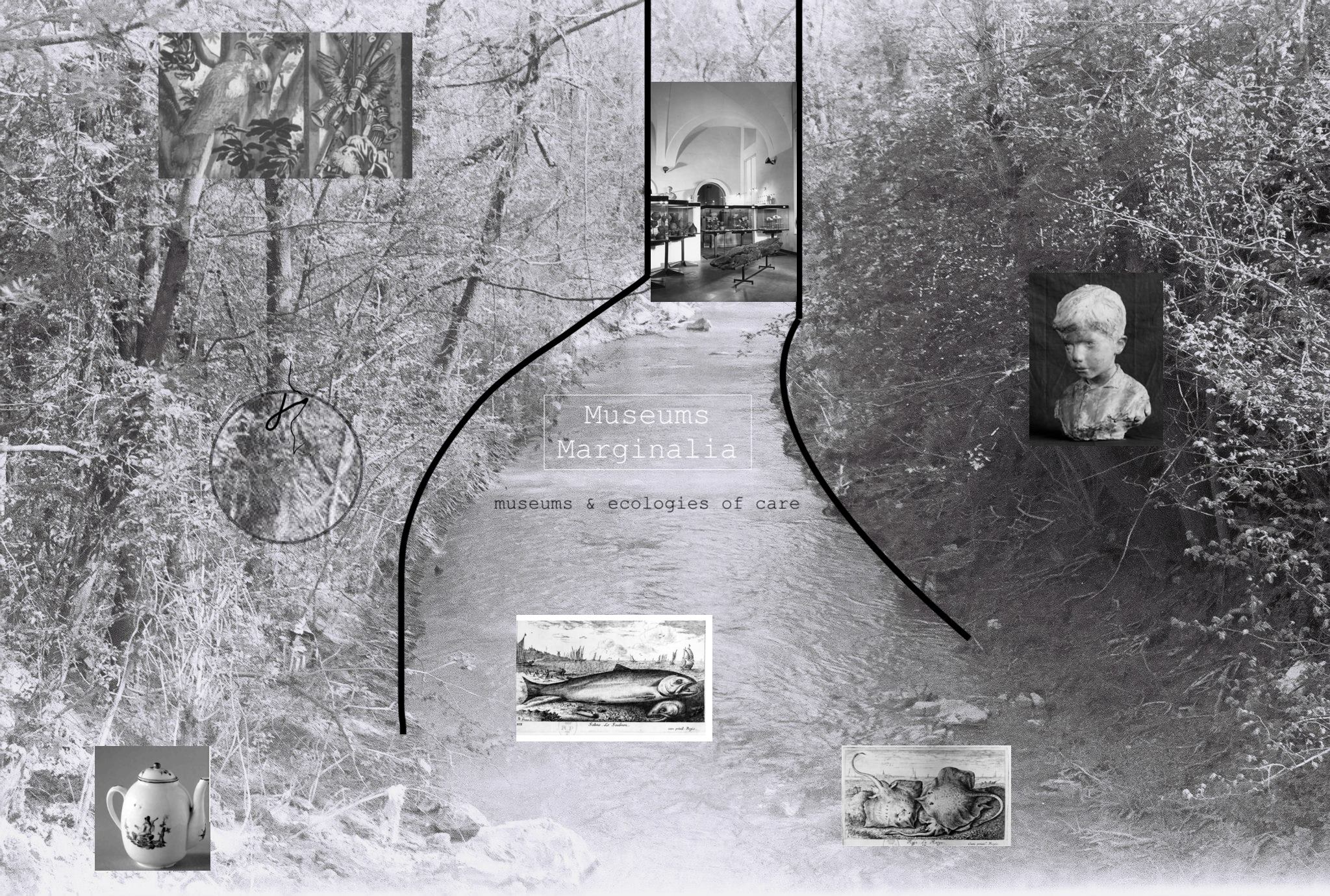

As a feminist investigation into ecologies of care and repair, This Recommendation System is Broken evolved into a second project through a series of iterations developed in collaboration with Fondazione ISI, Associazione Arteco, and local museums in Torino, Italy. Elaborated in the context of an art residency promoted by S+T+ARTS City of the Future, the project, Museums Marginalia, was born in response to the Nesta Italia Challenge “How can art reclaim the algorithm that tries to predict its success?”. Almost an algorithm of unsuccess, Museums Marginalia is an act of archival breakdown, cure, maintenance and an attempt to track invisible links between marginal objects, lost techniques, unusual materials found in multifaceted collections across several institutions. It builds upon Wendy Chun’s invitation to imagine machines that can unlearn colonial and discriminatory logics, while embracing randomness and surprise.

Considering digital media art as a tool for critical making (Ratto 2011) and speculative design (Dunne and Raby 2013), this digital essay presents two experiments that use “broken” algorithms, randomization, co-curation with the intent of decolonizing the archives and mapping missing knowledge. Working with open datasets from four different institutions, this multi-year project blends critical algorithm studies with computational art, while taking a research-creation approach to digitized museum collections. By means of creative coding, the project problematizes the duality of center-peripheries in art histories, while also imagining new potential scenarios for ethical applications of algorithms in synergy with the arts. This interactive essay will ultimately consist of a guided visual journey through the museum marginalia found thanks to the code created for this project. By showing some of the outputs, algorithmic curation will be discussed here as both a form of speculative inquiry and a provocation, in defense of cultural sustainability, inclusive art futures, diversity in algorithmic design.

Brokenness: disruption as creative process

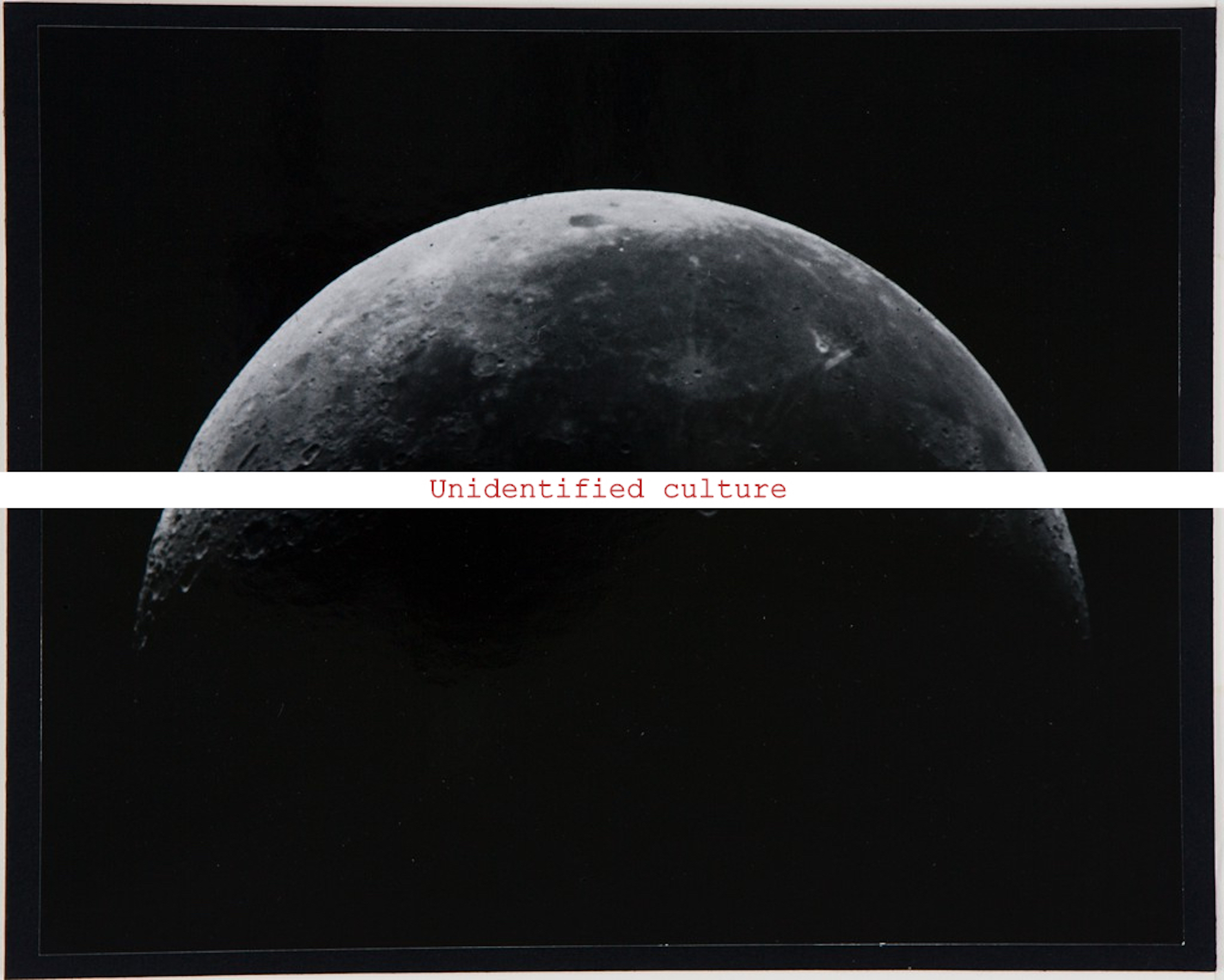

How can feminism help us reframe the technological as a mode of resistance, rather than a form of control? In her Manifesto for the Broken Machine, Professor Sarah Sharma invites us to reflect on this question, by mobilizing preexisting patriarchal notions about the arbitrary connection between broken machines and feminine dys-functions. In her own words: “In this piece, I offer a ‘feminism for the Broken Machine’ in order to account for the differential experience of being positioned within and determined by patriarchy, of being understood as a technology that does not work properly” (Sharma 172). For a long time, cyber-utopian visions of hyper-efficient technological futures have spread the idea that a seemingly perfect artificial intelligence will assist us in performing everyday tasks in an unprecedented way, making our lives easier, enhancing speed and power. In these distorted visions, which often neglect the systemic forms of social injustice that have been proliferating across digital media, “malfunctioning parts (nonconforming subjects) can be discarded and replaced. But the idea of our contemporary social-political-economic system as an already-broken machine full of the incompatibly queer, raced, classed, and sexed broken-down machines is politically exciting for feminism” (Sharma 173). When Sarah Sharma published this insightful piece in September 2020, I had been working on the concept of brokenness as part of my collaboration with metaLAB (at) Harvard. The resulting piece, This Recommendation System is Broken, is a critical reflection on the discriminatory practices induced by the most common algorithmic recommendation systems, which decide which media content emerges over others based on metrics of popularity, marketing, financial interests, and, indeed, pre-existing cultural biases. This system looks at epistemologies of not knowing: incomplete, inaccurate, unknown, unidentified digital accounts.

While conducting a digital ethnographic study of streaming platforms, I found that the term “broken” frequently appeared among online communities that in 2018 were reporting a defect in YouTube’s recommendation system and errors in the stream of suggested content. Since 2018, many academic theories have been pointing at the discriminatory effects of defective algorithmic technologies. However, what I was observing was not only a phenomenon connected to issues of classism, racism, sexism, but also the manifestation of a much more insidious social process of unlearning the unfamiliar and the unexpected, along with an overall dismissal of the responsibility involved in the act of actively making a choice. As automated recommendation systems perpetuate the formation of internet silos, echo chambers and ideological biases, we seem to be slowly losing connection with the often uncomfortable and yet necessary experience of being exposed to and confronted with what is different: unknown objects, cultures, identities, and the very process of learning itself. Perhaps more invisible than other media, but still so pervasive and performative, AI-driven recommendations are designed to influence our decision-making process, with the result that we constantly outsource decisions to algorithmic operations. It’s not just that algorithms are used by governments and institutions to make external decisions on our personal and social lives; they are also used to inform our very own choices. In a capitalistic dynamic where corporate profit, quantity of content and efficiency of time are celebrated and safeguarded, it comes naturally to ask whether abundance of choice is in fact the long-promised road to freedom or perhaps a burden that prevents us from educating ourselves about different options, good or bad, and from the fundamental awareness that being able to choose is still a form of privilege.

As Jonathan Cohn argues, in the history of recommendation systems and consumeristic wealth, “making a choice was framed as a ‘burden,’ while automated computer technologies became the solution. These recommendation programs taught the bourgeoisie to treat their privileges and options as a burden they could pleasurably cast off to technologies and technocrats” (Cohn 3). In this process of delegating our decisions to machines, we are training algorithms that do “not recognize a distinction between the deeply personal and the overtly communal, nor between freedom and oppression” (Cohn 26). Even more, Ravi Chakraborty (2020) underlines the pervasive effects that a pre-cybernetic illusion of order has had over the way algorithmic design evolved from simply designating a list of instructions for problem-solving in the history of mathematics, to serving mechanical computing devices following a logic of efficiency, automation, self-organization under the obsessive quest towards control and order. In the contemporary metaphysics of information (ibidem), where algorithms govern micro-decisions on a molecular level (Cohn 2019), recommendation systems have played a controversial role in favoring a structure that privileges perfected personalizations and predictions over questions of ethics and cultural sustainability.

In attempting to challenge the status quo, together with Sarah Sharma, I ask: “What happens when the machine world no longer reciprocates man’s love and instead questions his power?” In 2019, I began experimenting with creative approaches to filtering systems, precisely by building upon their presumed brokenness and generating alternative processes for suggesting content based on randomization rather than prediction. Hence, brokenness became for me a framework to interpret errors as opportunities, with the intent not only to expose inner algorithmic biases, but also to reimagine existing epistemologies and expectations around notions of algorithms and recommendation. In this process, it became central to consider disruption as an innovative methodological approach in research-creation aiming at fostering epistemological ruptures, intended as attempts to challenge the ideological past in order to promote a dialectical method to understand techno-cultural futures.

In taking Sharma’s invitation to envision a new technological order where broken machines talk to other machines, the following art project advances several prototypes for computational models that dialogue with existing algorithmic practices, by building upon their machine brokenness to suggest that disruption can indeed act as a creative process. In this broken algorithm series, forms of brokenness and failures are meant to re-articulate already existing struggles, gaps, errors in the way we read media, arts, and technologies. Broken machines, and broken knowledge (Marchessault 2005), are presented as the key to break both algorithmic culture and art history into their multifaceted potential existences. In building a series of algorithms for exploring undervalued artifacts and lesser-known artists, I also want to echo Judith Halberstam’s concept of failure as a queer act, as a positive force that entails new modes of knowledge: “Under certain circumstances failing, losing, forgetting, unmaking, undoing, unbecoming, not knowing may in fact offer more creative, more cooperative, more surprising ways of being in the world” (Halberstam 2-3).

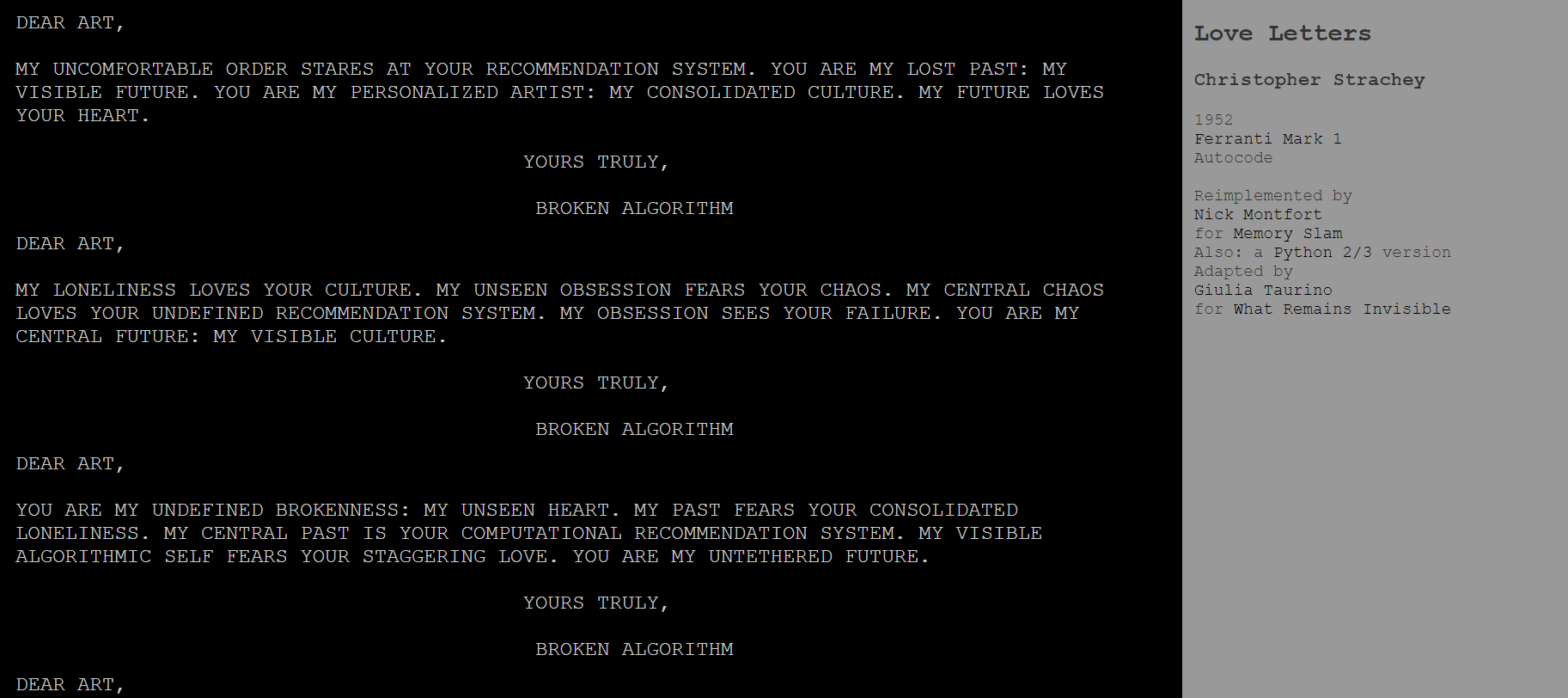

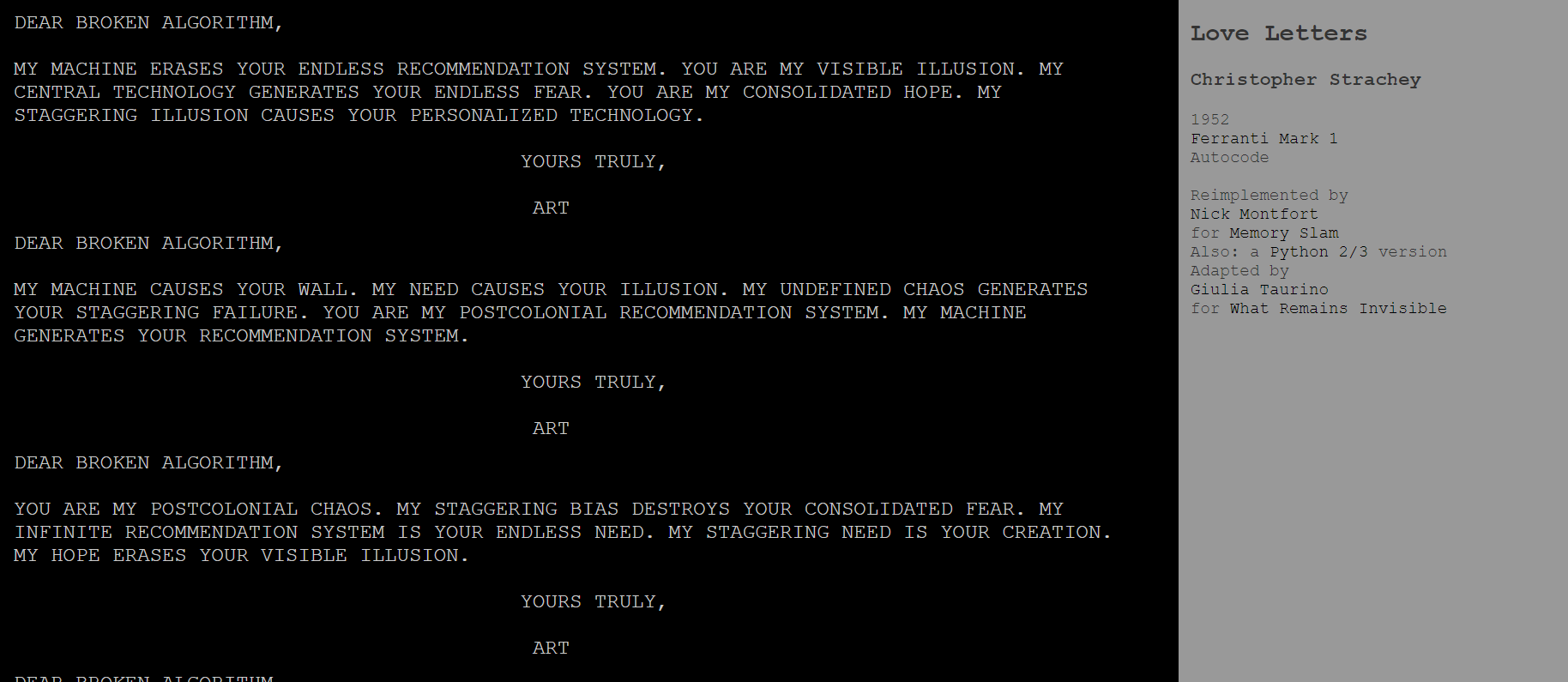

In this attempt to purposely break and queer recommendation systems, I adapted Christopher Strachey’s combinatory love letter algorithm (as reimplemented by Nick Montfort for Memory Slam) to narrate an imaginary love story between a broken algorithm and art. Dear/Yours Truly Broken Algorithm is a generative exercise, a variation on Strachey’s critique of heteronormative expressions of love that accompanied my computational explorations throughout the concept and practice of algorithmic co-curation. It’s an iterative series of epistolary exchanges and the beginning of a platonic relationship that makes art the center of a machine's desires.
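To give a sense of how a combinatory letter of this kind can be generated, here is a minimal sketch in Python. It is not the code used for Dear/Yours Truly Broken Algorithm, nor Montfort's reimplementation: it only follows the general shape of Strachey's generator (a salutation, a handful of sentences drawn from two fixed templates, a sign-off), and the word lists, the salutation and the function names are all invented for illustration.

```python
import random

# Hypothetical vocabularies, invented for this illustration only.
ADJECTIVES = ["broken", "unseen", "marginal", "tender", "unfinished"]
NOUNS = ["archive", "glaze", "thread", "fragment", "longing"]
ADVERBS = ["randomly", "patiently", "curiously", "tenderly"]
VERBS = ["seeks", "mends", "remembers", "unlearns"]


def sentence(rng):
    """Return one sentence built from one of two fixed templates."""
    if rng.random() < 0.5:
        return (f"My {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)} "
                f"{rng.choice(ADVERBS)} {rng.choice(VERBS)} "
                f"your {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)}.")
    return f"You are my {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)}."


def love_letter(seed=None, n_sentences=5):
    """Assemble a salutation, n random sentences and a sign-off."""
    rng = random.Random(seed)  # a seed makes a given letter reproducible
    body = " ".join(sentence(rng) for _ in range(n_sentences))
    return (f"Dear Art,\n\n{body}\n\n"
            f"Yours {rng.choice(ADVERBS)},\nBroken Algorithm")


if __name__ == "__main__":
    print(love_letter())
```

Each run without a seed produces a different letter; the grammar stays fixed while the vocabulary is recombined, which is where the critique and the humor of the form come from.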

Invisible Objects, Invisible Connections: lost techniques and undervalued materials

A focus on invisibility as both the cause and the result of missing connections between peripheral artworks has shifted this second experimental project in the “broken algorithm” series towards a new fascinating vision, where the concepts of visibility and invisibility are tied to invisible links, connections, networks among objects, rather than solely to mechanisms of isolation of single items. This change brings several paths to explore: while it takes distance from the previous project in the computational art series (i.e. This Recommendation System is Broken, from Curatorial A(i)gents, metaLAB (at) Harvard), it also helps us problematize and complexify the very notion of invisibility, as deeply intertwined with the notions of network and hierarchy in a system profoundly biased towards white and male artists.

Russell Ferguson (1990) describes the compresence of whiteness and maleness as “an invisible center”, accepted as the unspoken assumption of universal human traits, hard to pinpoint and yet ever recurrent, ever the same in this global process of cultural marginalization that has been affecting both past and contemporary human history. However, invisibility as an imposed reality, rather than a choice to hide the specific biases of dominant authority, is also and most often the state of marginalized communities that exist outside of the white, male, rich and powerful center. From our past to our future, invisibility is transitioning to algorithmic culture in insidious ways. As Caroline Criado Perez has powerfully stated in her book, Invisible Women: Data Bias in a World Designed for Men, “the presumption that what is male is universal is a direct consequence of the gender data gap. Whiteness and maleness can only go without saying because most other identities never get said at all. But male universality is also a cause of the gender data gap: because women aren't seen and aren't remembered, because male data makes up the majority of what we know, what is male comes to be seen as universal. It leads to the positioning of women, half the global population, as a minority. With a niche identity and subjective point of view. In such a framing, women are set up to be forgettable. Ignorable. Dispensable - from culture, from history, and from data. And so, women become invisible” (Criado-Perez 21).

Inevitably, this hierarchy is reflected in our cultural and artistic heritage, museums still being spaces of conflict between discriminatory practices and the hope of preserving a shared cultural memory. The need for making marginalia the center of inquiry turned my project into a search for lost techniques and undervalued materials, across groups of widely available objects, usually associated with labor and for a long time categorized as low art or confined to the separate field of crafts. Musei Reali, together with Fondazione Torino Musei (with its open datasets from Galleria Civica d’Arte Moderna e Contemporanea, Museo d’Arte Orientale, Palazzo Madama) have been an important source of inspiration in developing my work on algorithms of invisibility, failure, unsuccess at the intersection of arts and technology. While directly attached to the city’s ducal and royal history, the institutional history of each one of these museums is made of an assemblage of separate collections with their own epistemological contributions. Starting from museums’ past and present, I tackled invisibility as the status of artworks existing at the margins of the binary structure of center versus periphery, as inherited from male-centric, colonialist perspectives. Through a series of experiments based on “guided” randomization, I attempted to broaden the periphery into a system of its own, made of unpredictable connections and unexpected curiosities.

Museums Marginalia

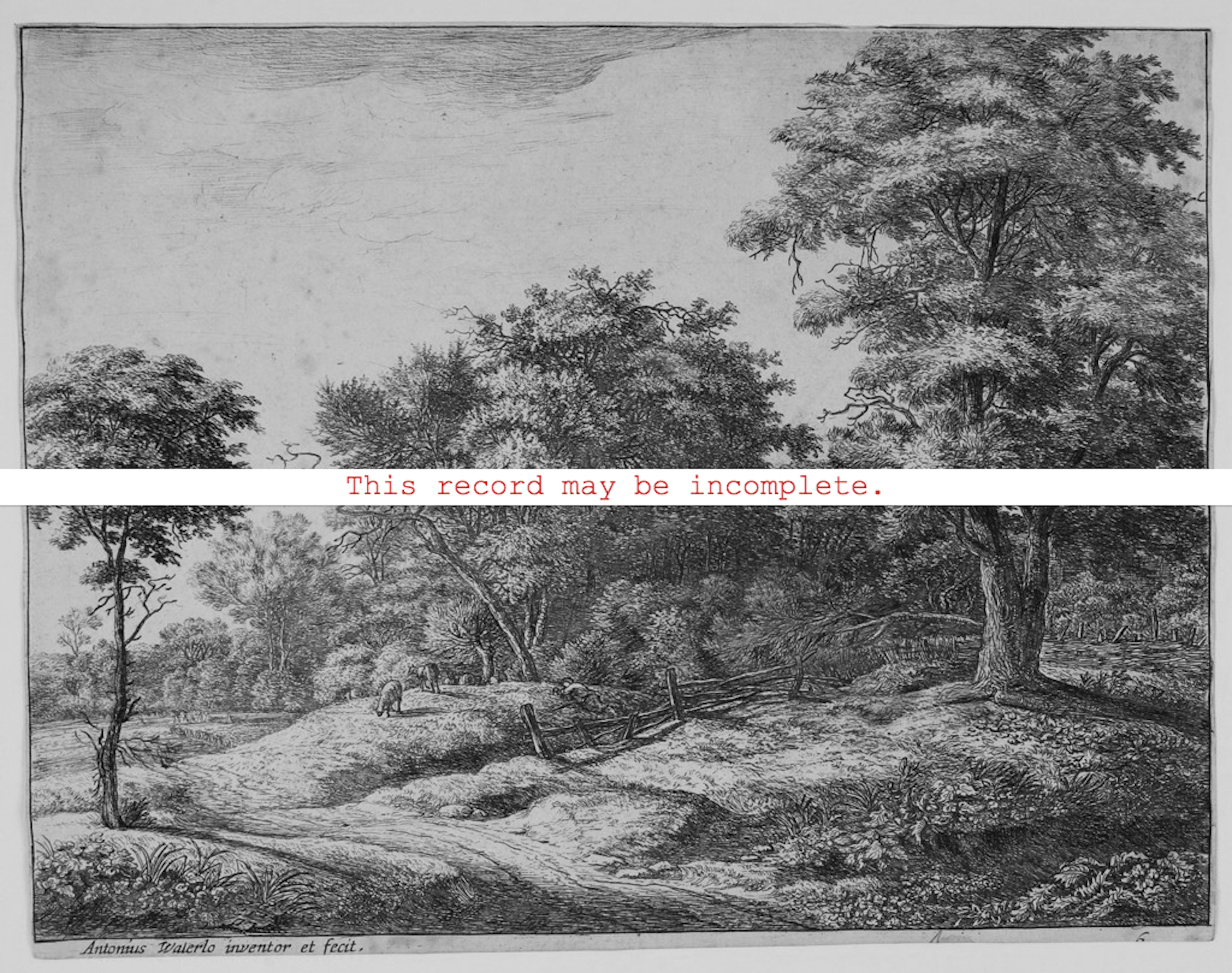

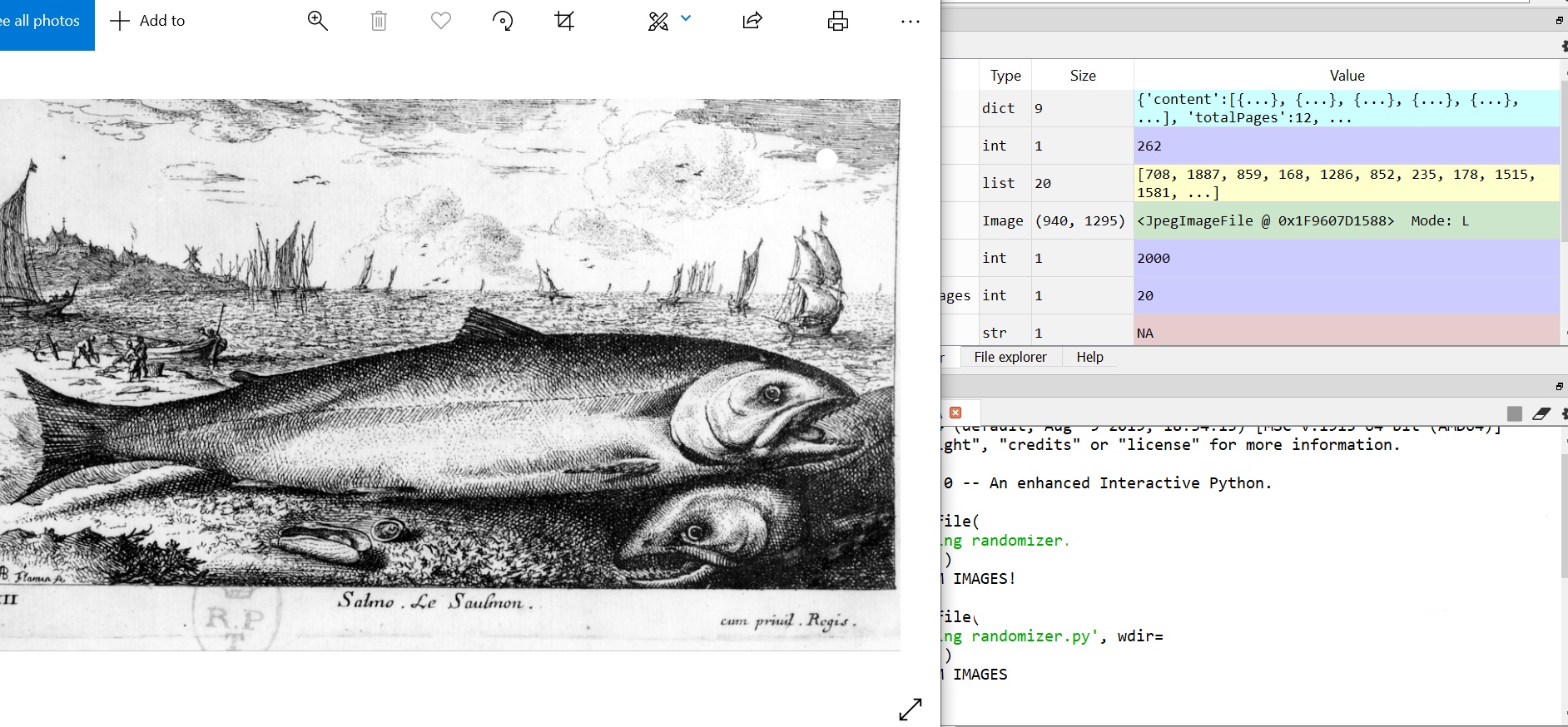

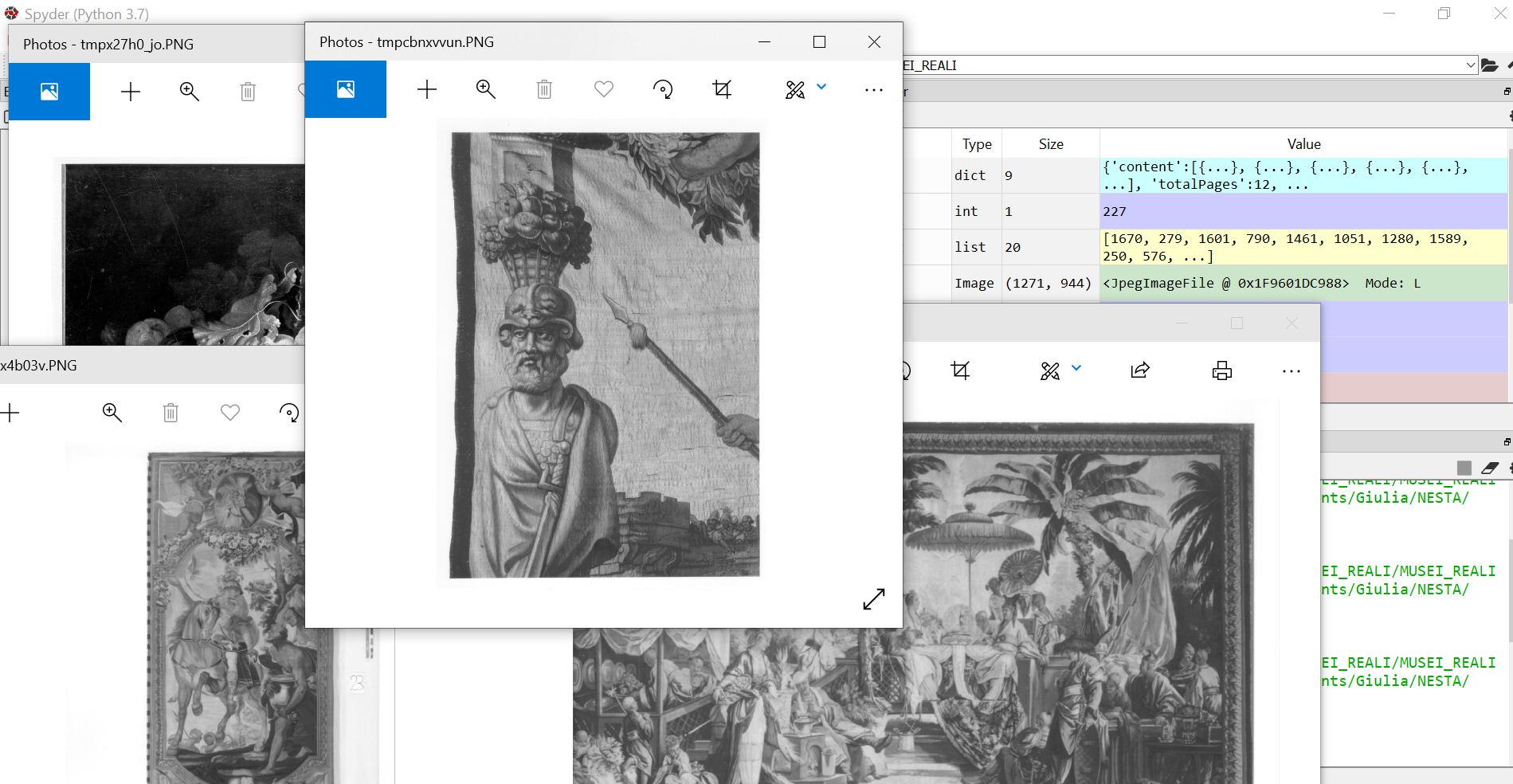

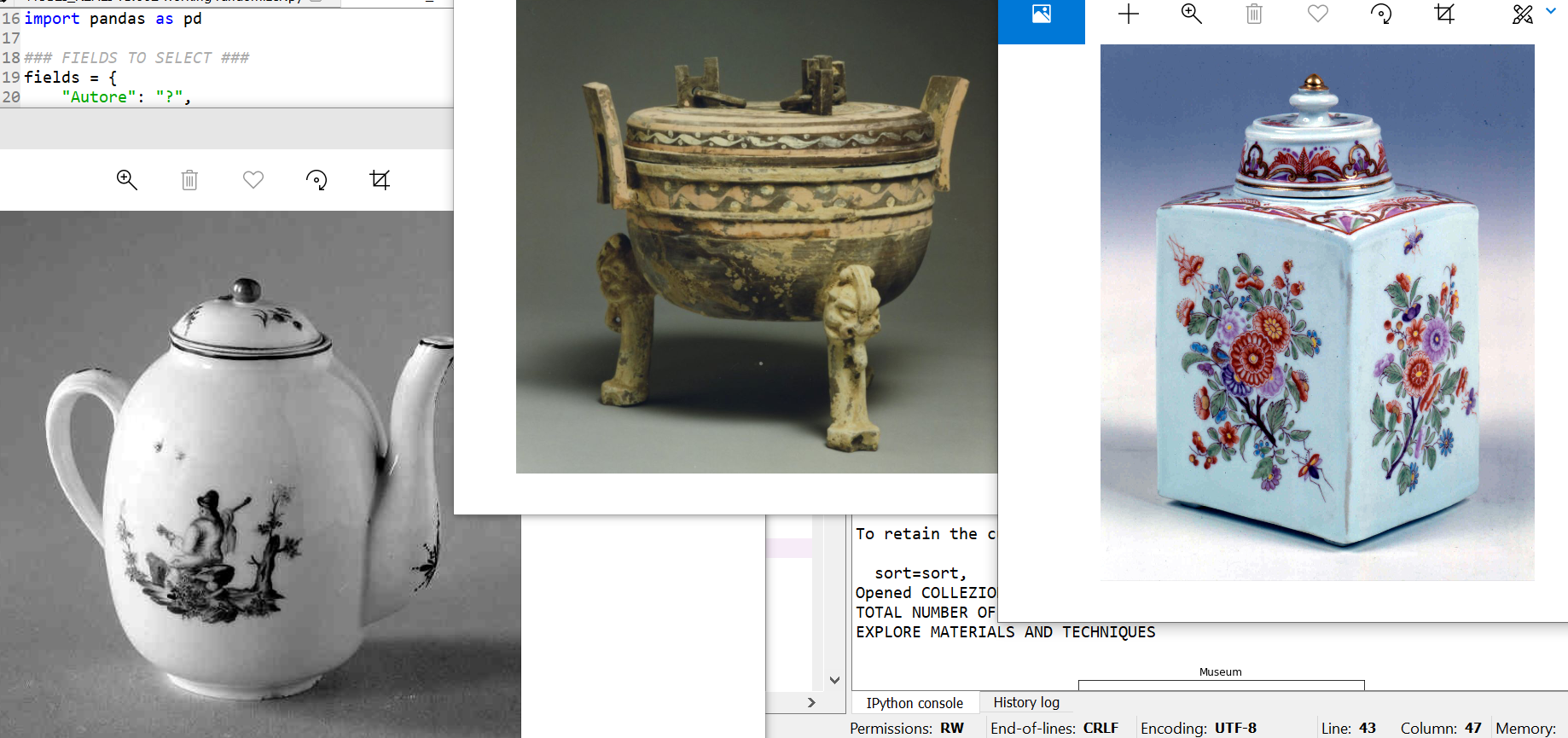

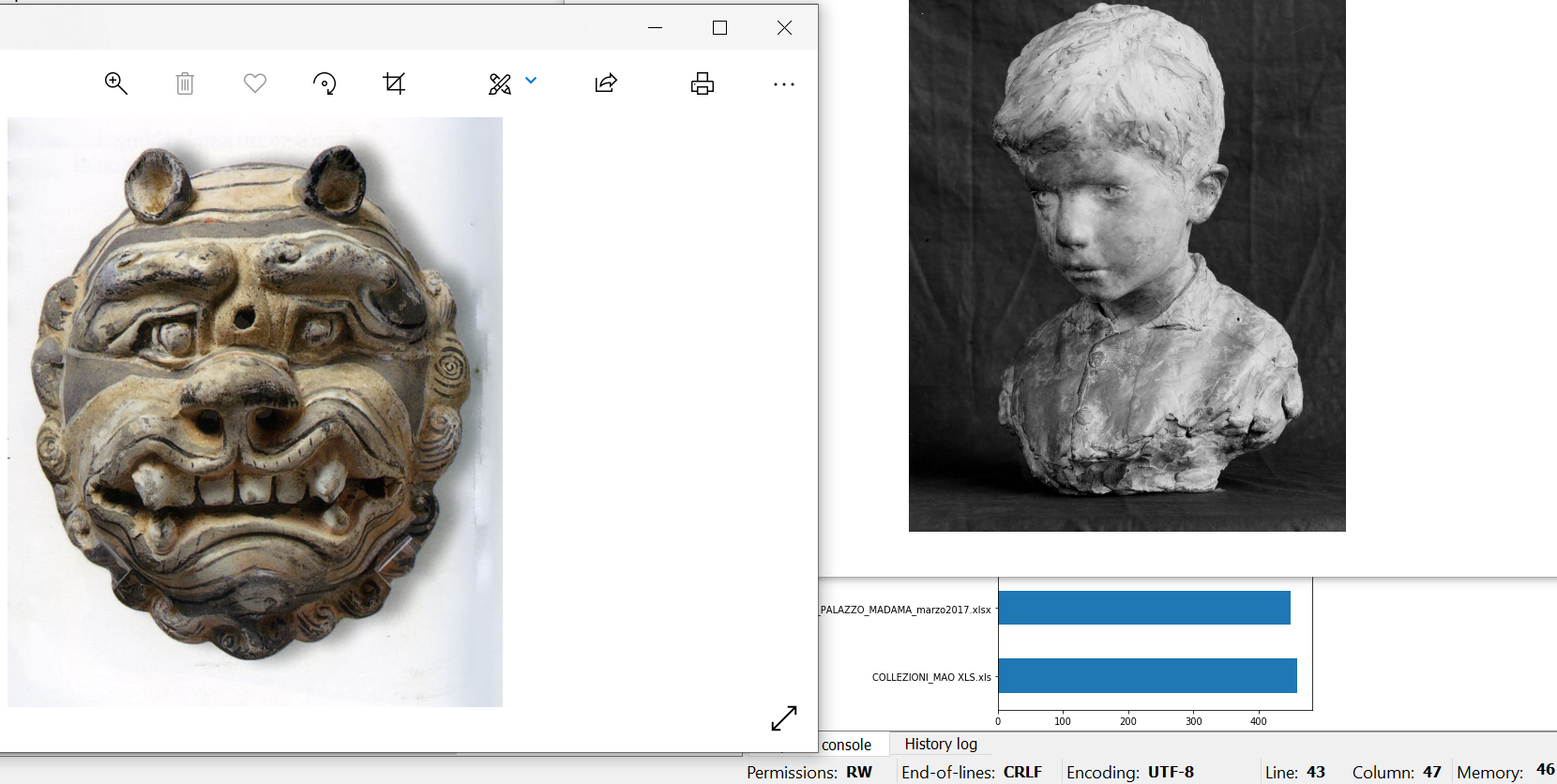

The first iteration of a prototype tentatively called Museums Marginalia, one that would focus on artworks that remain invisible, at the margins of archives and in the background of museum galleries, was based on a sample dataset made available by Musei Reali thanks to the partnership with Associazione Arteco. This subset of a much larger collection comprises 2,000 black and white images with no metadata, meaning that no human-curated classification was applied to the data beyond the digitization process. The result of applying the code to such a dataset is a “blind” randomizer that selects images based on an entirely computational curatorial process. This example of algorithmic curation draws an arbitrary path through a miscellanea of seemingly unrelated items, curiosities, artworks hidden in an institutional storage. A variety of techniques and materials blend together in a continuous process of discovery and exploration of unexpected objects.
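A "blind" randomizer of this kind can be sketched in a few lines of Python. This is an illustrative sketch rather than the project's code: it assumes the digitized images sit as files in a single local folder, and the folder layout, the list of file extensions and the function name are assumptions made for the example.

```python
import random
from pathlib import Path

# Assumed file extensions; the actual format of the digitized images is not specified.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".tif", ".tiff"}


def blind_selection(image_dir, k=9, seed=None):
    """Pick up to k image files uniformly at random, using no metadata."""
    files = sorted(
        p for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    rng = random.Random(seed)  # pass a seed to replay a given "path"
    # Sampling without replacement: no image appears twice in one selection.
    return rng.sample(files, min(k, len(files)))
```

Because nothing but the file list enters the selection, every image has the same chance of surfacing, whatever its subject, maker or presumed value.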

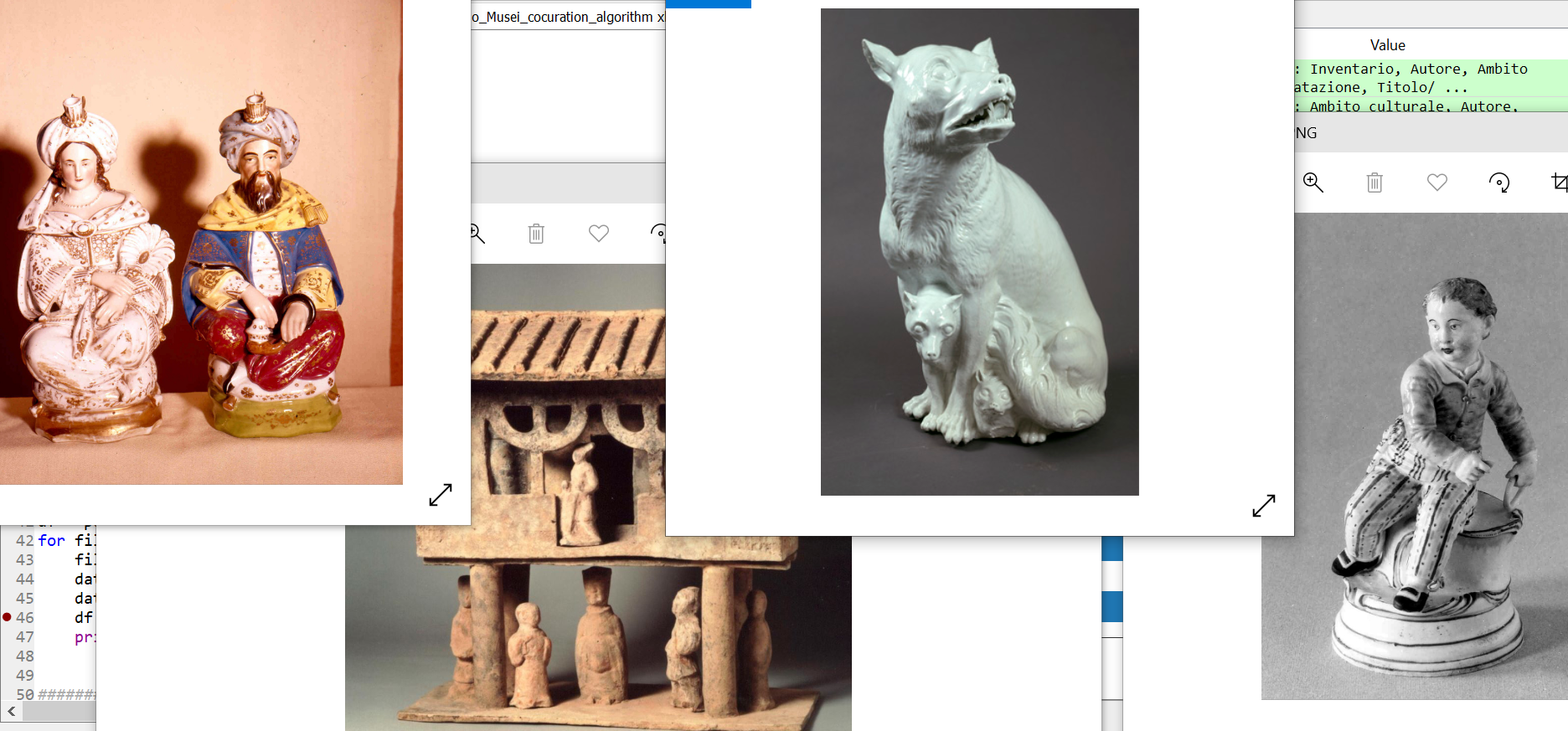

The first prototype shows a machine-led curatorial process based on automated random selection of digitized images. With the second iteration, the intent was to add complexity to this concept and create a process of algorithmic co-curation based on human-machine collaboration, filtering items according to a series of elements and descriptors curated beforehand. Together with Associazione Arteco (cultural partner) and Fondazione ISI (scientific partner), we were able to find a larger corpus of digitized images from three museums - Galleria Civica d’Arte Moderna e Contemporanea, Museo d’Arte Orientale, Palazzo Madama - as part of an open data initiative by Fondazione Torino Musei. Each of these institutions created freely accessible and reusable datasets containing a human-curated catalog with data and metadata about a variety of objects. The data include information about inventory, author, cultural provenance, date, title/subject, materials/techniques, and a URL reference to the image. Museums Marginalia - iteration n. 2 originated from the collaborative work of curators who catalogued the objects, and evolved into a thorough study of the data and metadata available, with attention to information relative to materials and techniques traditionally associated with low art, crafts, labour.

This research led to a path of learning and unlearning the ways we traditionally associate handcrafted items for daily use with a lower form of art. Artisanal work comprises materials like linen, cotton, silk, along with paper, porcelain, clay, iron. These artworks hidden in the museum inventory are things meant to be worn, walked on, sat on, even drunk from. They are meant to be used. Textiles and clay in particular caught our interest, as they have become symbols of craftsmanship for minorities and communities of women across several cultures. These materials were the source of inspiration for imagining an algorithm that brings to the surface undervalued objects and connects them across collections including artworks from different eras, geographic locations, cultural origins. In many traditions, needlecraft and ceramic carving have been a place for making decorations, ornaments as much as daily-use utensils, showing that art is in fact useful: it is a need, both in its making and in its use. Guided by human curation, in the curated dataset as much as in its very design, the following screenshots show an algorithm coded to help humans co-create with machines. In a moment when the digital often directs our practices, actions and interactions, this computational art project aims at reconnecting us with the analog.
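As a rough illustration of what "guided" randomization over a human-curated catalog might look like, the Python sketch below filters records by the materials named above and then draws random objects while alternating between museums. It is a sketch under stated assumptions, not the algorithm built for Museums Marginalia: the record structure, the field names (`museum`, `inventory`, `materials`), the English keywords and the sample records are all hypothetical, and the actual open datasets may be organized quite differently.

```python
import random
from collections import defaultdict

# Materials mentioned in the essay; the real catalogs may use other terms.
CRAFT_MATERIALS = {"linen", "cotton", "silk", "paper", "porcelain", "clay", "iron"}

# Invented placeholder records, not taken from any museum dataset.
SAMPLE = [
    {"museum": "Palazzo Madama", "inventory": "demo-1", "materials": "silk embroidery"},
    {"museum": "Palazzo Madama", "inventory": "demo-2", "materials": "glazed porcelain"},
    {"museum": "Museo d'Arte Orientale", "inventory": "demo-3", "materials": "clay, glaze"},
    {"museum": "Museo d'Arte Orientale", "inventory": "demo-4", "materials": "bronze"},
    {"museum": "Galleria Civica", "inventory": "demo-5", "materials": "oil on canvas"},
    {"museum": "Galleria Civica", "inventory": "demo-6", "materials": "ink on paper"},
]


def matches_materials(record, keywords=CRAFT_MATERIALS):
    """Naive substring match on the human-curated materials field."""
    text = record.get("materials", "").lower()
    return any(word in text for word in keywords)


def guided_selection(records, k=6, seed=None):
    """Randomly pick up to k craft-related records, alternating between museums."""
    rng = random.Random(seed)
    by_museum = defaultdict(list)
    for record in records:
        if matches_materials(record):  # the human-curated "guide"
            by_museum[record["museum"]].append(record)
    for group in by_museum.values():
        rng.shuffle(group)  # the machine's random contribution
    museums = sorted(by_museum)
    picks = []
    # Round-robin across museums so no single collection dominates.
    while len(picks) < k and any(by_museum[m] for m in museums):
        for m in museums:
            if by_museum[m] and len(picks) < k:
                picks.append(by_museum[m].pop())
    return picks
```

Calling `guided_selection(SAMPLE, k=3)` returns one matching object from each of the three placeholder collections. The human contribution lies in the catalog and in the choice of keywords; the machine contributes only the shuffle.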

Conclusion

Open-data frameworks offer unique opportunities to start reasoning on human-algorithmic co-curation in the context of cross-institutional collaboration. Instead of building upon biased imaginaries that reinforce cultural hierarchies and feed into a process of recommendation based on success, Museums Marginalia presents a computational approach to co-curating art collections, one that is ethical, educational and collaborative in its design. It is ethical in the way it gives visibility to undervalued or under-researched artworks. Moreover, it serves educational purposes as it aims at exploring a feminist pedagogy by understanding the limits of our own institutional and cultural biases. Finally, it is collaborative in that it creates an algorithm that promotes communication between several institutions in synergy with - and not in contrast or as an alternative to - human curators.

The museums that granted access to the datasets for this project (Musei Reali, Galleria Civica d’Arte Moderna e Contemporanea, Museo d’Arte Orientale, Palazzo Madama) comprise heterogeneous archives coming with a vast ensemble of digitized artworks, but they are also, most importantly, physical spaces filled with fabrics, embroideries, vases and other often forgotten objects. Paintings and sculptures catch most of our attention, while objects classified as crafts generate a narrative of their own, built upon invisible hands, with unknown artists and limited information about their work. The idea of a feminist, broken machine proved to be effective in addressing and challenging outdated conceptions of high versus low arts that mimic a simplified, binary structure of power based on oppositions (e.g. center/periphery, male/female, white/black). Broken algorithms and low arts meet in this artistic experiment to generate a digital patchwork of objects confined in the background or found at the margins of the museum experience.

Museums Marginalia invites museum-goers to understand both the physical and imaginary margins that we constructed through a series of cultural definitions and arbitrary standards. It asks us to look at used, unfinished, uninviting objects. In this process of learning the unfamiliar and unknown, the algorithmic co-curation draws a network of abstract and material associations between different artistic traditions outside of the most renowned and successful art scenes. We discovered underestimated and under-researched objects connected to a still untold art history made of art practices coexisting with forms of labour. For many female artists, such objects provided opportunities to break the boundaries of domestic and industrial crafts, turning activities like weaving or pottery-making into ways to rehabilitate non-dominant or marginalized knowledge and build communities.

Some of the pottery art selected by the algorithm created for the second iteration is rooted in traditional practices found across different countries and intertwined with a deeply physical experience in the way it relates to daily life activities like cooking or eating. Similarly, the project presented here uses coding as a practice that can be creative, experimental, critical, and at the same time necessary to exist and operate in our own increasingly digital routines. In a way, this computational art project is itself a craft, a tool, an artwork intended to be used, manipulated, broken in order to explore invisible histories of computing and the arts.

Works Cited

Chakraborty, Ravi Sekhar. “The Specter of Self-Organization.” Algorithmic Culture: How Big Data and Artificial Intelligence Are Transforming Everyday Life, edited by Stefka Hristova, et al., Lexington Books, 2020.

Cohn, Jonathan. "Introduction: Data Fields of Dreams." The Burden of Choice. Rutgers University Press, 2019. 1-26.

Criado-Perez, Caroline. Invisible Women: Data Bias in a World Designed for Men. Abrams Press, 2019.

Dunne, Anthony, and Fiona Raby. Speculative Everything: Design, Fiction, and Social Dreaming. The MIT Press, 2013.

Ferguson, Russell. "Introduction: Invisible Center." Out There: Marginalization and Contemporary Cultures, edited by Russell Ferguson et al., New Museum of Contemporary Art, 1990.

Halberstam, Jack. “Introduction: Low Theory.” The Queer Art of Failure, Duke University Press, 2020, pp. 1–26, doi:10.1515/9780822394358-003.

Marchessault, Janine. Marshall McLuhan: Cosmic Media. SAGE, 2005.

Ratto, Matt. “Critical Making: Conceptual and Material Studies in Technology and Social Life.” The Information Society, vol. 27, no. 4, Taylor & Francis Group, 2011, pp. 252–60, doi:10.1080/01972243.2011.583819.

Sharma, Sarah. “A Manifesto for the Broken Machine.” Camera Obscura (Durham, NC), vol. 35, no. 2, Duke University Press, 2020, pp. 170–79, doi:10.1215/02705346-8359652.